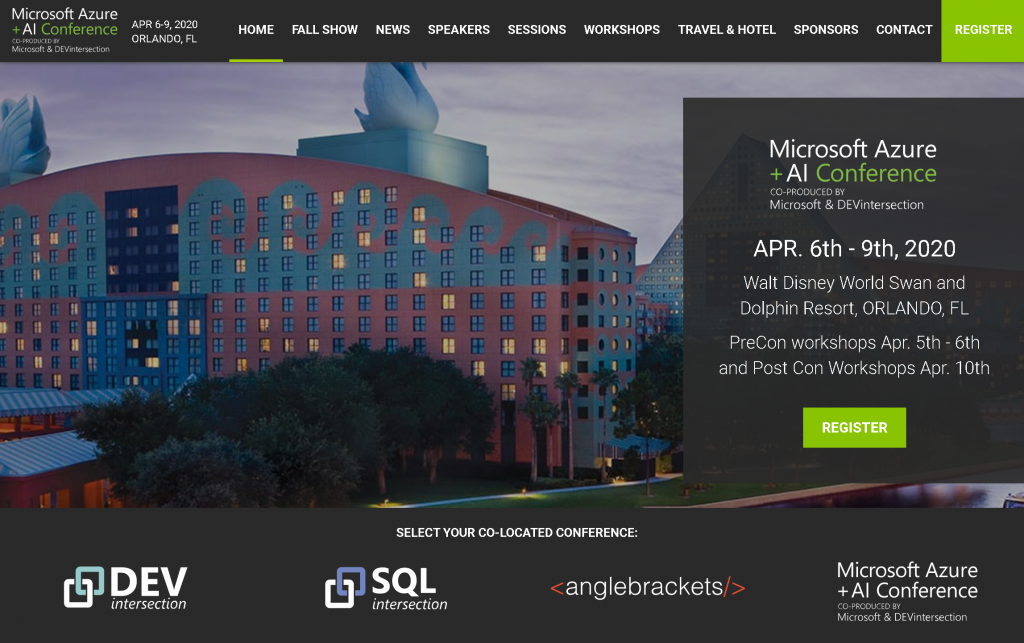

Barring any issues with COVID-19, I plan to speak at the Azure+AI Conference, April 6-9, 2020, at the Walt Disney World Swan and Dolphin Resort in Orlando.

My talk abstracts follow:

Learning from Feedback – Explore Reinforcement Learning for Next Best Action with Azure Personalizer

Reinforcement learning is an approach to machine learning that learns behaviors from feedback on its own use. The Azure Cognitive Service Personalizer allows an application to choose the best experience to show its users, learning from their real-time behavior.

In this session you will learn about reinforcement learning using Azure Personalizer, a cloud-based API service that helps applications choose the best content item to show each user. Personalizer uses reinforcement learning to select the best item (action) based on collective behavior and reward scores across all users. Actions are the content items to choose from, such as news articles, specific movies, or products. The service selects the best item from these content items based on the collective, real-time information you provide about the content and the context.
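To make the rank-and-reward loop concrete, here is a toy contextual bandit in plain Python. It is a hypothetical sketch: the `EpsilonGreedyRanker` class, its `rank`/`reward` methods, and the simulated click data are invented for illustration and are not the Personalizer SDK. Personalizer's own learner is more sophisticated than epsilon-greedy. The sketch only shows the basic cycle described above: given a context, choose an action, observe a reward score, and update.

```python
import random
from collections import defaultdict


class EpsilonGreedyRanker:
    """Toy contextual bandit (hypothetical; not the Personalizer API).

    rank() picks an action for a context; reward() feeds back a score.
    """

    def __init__(self, actions, epsilon=0.2, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon              # fraction of calls spent exploring
        self.rng = random.Random(seed)
        self.totals = defaultdict(float)    # summed reward per (context, action)
        self.counts = defaultdict(int)      # number of rewards per (context, action)

    def _mean(self, context, action):
        n = self.counts[(context, action)]
        return self.totals[(context, action)] / n if n else 0.0

    def best_action(self, context):
        """Greedy choice: the action with the highest average reward so far."""
        return max(self.actions, key=lambda a: self._mean(context, a))

    def rank(self, context):
        """Explore with probability epsilon, otherwise exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return self.best_action(context)

    def reward(self, context, action, score):
        """Record a reward score, e.g. 1.0 for a click and 0.0 otherwise."""
        self.totals[(context, action)] += score
        self.counts[(context, action)] += 1


def simulate(rounds=5000):
    """Simulated users: morning readers click news, evening readers click movies."""
    ranker = EpsilonGreedyRanker(["news", "movies", "products"], epsilon=0.2, seed=42)
    preferred = {"morning": "news", "evening": "movies"}
    users = random.Random(1)
    for _ in range(rounds):
        context = users.choice(["morning", "evening"])
        action = ranker.rank(context)
        score = 1.0 if action == preferred[context] else 0.0
        ranker.reward(context, action, score)
    return ranker


ranker = simulate()
print(ranker.best_action("morning"), ranker.best_action("evening"))
```

The `epsilon` parameter is what provides exploration here. Without it, the evening context would keep being served the first tied action ("news") and would never discover that movies earn clicks.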

In this hands-on session, you will learn reinforcement learning concepts such as exploration, features, actions and context, active and inactive events, rewards, and improving the learning loop with offline evaluations, as well as scalability and performance.
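Offline evaluation asks how a different policy would have performed on events that were already logged. A common estimator for this is inverse propensity scoring (IPS). The sketch below is a generic illustration of IPS under that assumption. `LoggedEvent`, `ips_estimate`, and the example policies are hypothetical names, and this is not a claim about exactly how Personalizer computes its offline evaluations.

```python
from dataclasses import dataclass


@dataclass
class LoggedEvent:
    context: str
    action: str          # action the live policy actually showed
    probability: float   # probability the live policy assigned to that action
    reward: float


def ips_estimate(events, policy):
    """Inverse propensity scoring estimate of a deterministic policy's mean reward.

    Events where the candidate policy agrees with the logged action are
    reweighted by 1 / probability; all other events contribute zero.
    """
    if not events:
        raise ValueError("need at least one logged event")
    total = 0.0
    for e in events:
        if policy(e.context) == e.action:
            total += e.reward / e.probability
    return total / len(events)


# Hypothetical log from a policy that chose uniformly between two actions.
LOGGED = [
    LoggedEvent("morning", "news", 0.5, 1.0),
    LoggedEvent("morning", "movies", 0.5, 0.0),
    LoggedEvent("evening", "news", 0.5, 0.0),
    LoggedEvent("evening", "movies", 0.5, 1.0),
]


def time_aware_policy(context):
    return "news" if context == "morning" else "movies"


def always_news(context):
    return "news"


print(ips_estimate(LOGGED, time_aware_policy))  # 1.0
print(ips_estimate(LOGGED, always_news))        # 0.5
```

Dividing by the logged probability corrects for how often the live policy happened to show each action, which is why exploration data is needed for this kind of estimate to work.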

Fair and Ethical AI – Fighting Bias with InterpretML

Most real datasets have hidden biases. Being able to detect the impact of the bias in the data on the model, and then to repair the model, is critical if we are going to deploy machine learning in applications that affect people’s health, welfare, and social opportunities. This requires models that are intelligible.

Microsoft InterpretML is an open-source Python package for training interpretable (glassbox) models, such as the Explainable Boosting Machine (EBM), and for explaining black-box systems. InterpretML is made to help developers experiment with ways to introduce explanations of the output of AI systems. InterpretML is currently in alpha and available on GitHub. In this talk we will review InterpretML and draw parallels with LIME, ELI5, and SHAP. InterpretML implements a number of intelligible models, including the Explainable Boosting Machine (an improvement over generalized additive models), and several methods for generating explanations of the behavior of black-box models or their individual predictions.
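What makes a generalized additive model intelligible is that each prediction is an intercept plus one contribution per feature, so every contribution can be read off directly. The following is a hypothetical, heavily simplified sketch of that idea in plain Python: it fits per-feature lookup tables by round-robin boosting on already-binned features. It is not InterpretML's code or API. A real EBM adds bagging, smarter binning, pairwise interactions, and much more.

```python
from collections import defaultdict


class AdditiveModel:
    """Toy glassbox model: y ~ intercept + f_0(x_0) + f_1(x_1) + ...

    Hypothetical sketch of the additive idea behind EBMs, not InterpretML.
    Features are assumed to be already binned (categorical values).
    """

    def __init__(self, n_rounds=200, learning_rate=0.1):
        self.n_rounds = n_rounds
        self.learning_rate = learning_rate
        self.intercept = 0.0
        self.shapes = []  # one lookup table (bin value -> contribution) per feature

    def fit(self, rows, targets):
        n_features = len(rows[0])
        self.intercept = sum(targets) / len(targets)
        self.shapes = [defaultdict(float) for _ in range(n_features)]
        for _ in range(self.n_rounds):
            # Round-robin: boost one feature at a time so each f_j depends on x_j only.
            for j in range(n_features):
                residual_sum = defaultdict(float)
                residual_count = defaultdict(int)
                for row, y in zip(rows, targets):
                    residual_sum[row[j]] += y - self.predict(row)
                    residual_count[row[j]] += 1
                # Nudge each bin of feature j toward its mean residual.
                for value, total in residual_sum.items():
                    step = total / residual_count[value]
                    self.shapes[j][value] += self.learning_rate * step
        return self

    def explain(self, row):
        """Per-feature contributions for one prediction (a local explanation)."""
        return [(j, self.shapes[j].get(v, 0.0)) for j, v in enumerate(row)]

    def predict(self, row):
        return self.intercept + sum(c for _, c in self.explain(row))


ROWS = [("a", "x"), ("a", "y"), ("b", "x"), ("b", "y")]
TARGETS = [6.0, 3.0, 4.0, 1.0]
model = AdditiveModel().fit(ROWS, TARGETS)
# Intercept 3.5; contributions for ("a", "x") come out close to 1.0 and 1.5.
print(model.intercept, model.explain(("a", "x")))
```

Because the prediction is literally the sum of the explained contributions, inspecting `shapes` shows how the model treats each feature value, which is how a biased feature effect could be spotted and then edited.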